Web Performance: Understanding Caching Layers

A cache is a storage layer that keeps copies of frequently accessed data so that it can be served faster than retrieving it from the original source.

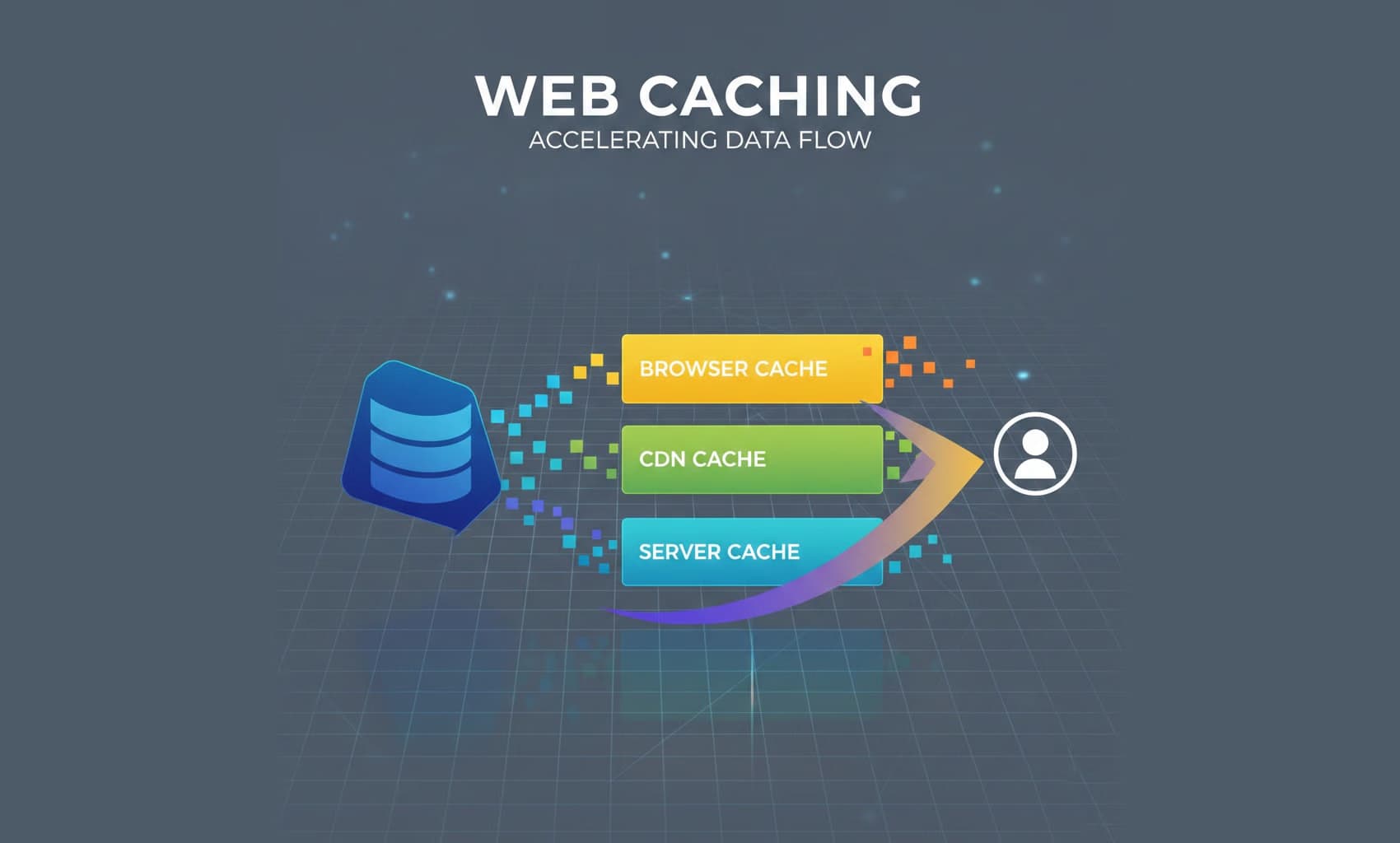

Caching is essential for making modern web apps fast and scalable: it saves servers from repeating the same work and shortens response times for users. There are multiple cache layers (client, CDN, server/reverse proxy, application stores), each with its own tradeoffs.

This article discusses caching approaches for a modern stack (Cloudflare, Nginx, Redis, Next.js), providing an overview of caching layers and their purposes to help developers understand how multi-layer caching works.

The Caching Strategy

Effective caching is a series of layers. If a request falls through one layer, the next should catch it. This approach not only accelerates response times but also enhances scalability and resilience.
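The fall-through behaviour can be pictured as a chain of lookups. The sketch below is purely illustrative: the `CacheLayer` interface, the layer names, and the `lookup` function are hypothetical, in-memory maps stand in for the real CDN, Nginx, and Redis layers, and TTLs are ignored for brevity.

```typescript
// Hypothetical model of a layered cache: each layer is tried in order,
// and the origin is consulted only when every layer misses.
interface CacheLayer {
  name: string;
  store: Map<string, string>;
}

interface LookupResult {
  value: string;
  servedBy: string; // name of the layer that answered, or "origin"
}

function lookup(
  key: string,
  layers: CacheLayer[],
  origin: (key: string) => string,
): LookupResult {
  for (let i = 0; i < layers.length; i++) {
    const hit = layers[i].store.get(key);
    if (hit !== undefined) {
      // Backfill the layers in front of this one so the next request stops earlier.
      for (let j = 0; j < i; j++) layers[j].store.set(key, hit);
      return { value: hit, servedBy: layers[i].name };
    }
  }
  // Every layer missed: do the expensive work once, then populate all layers.
  const value = origin(key);
  for (const layer of layers) layer.store.set(key, value);
  return { value, servedBy: "origin" };
}

// Example wiring with three stand-in layers.
const layers: CacheLayer[] = [
  { name: "cdn", store: new Map<string, string>() },
  { name: "nginx", store: new Map<string, string>() },
  { name: "redis", store: new Map<string, string>() },
];
```

In this model, the first request for a key reaches the origin, and later requests are answered by the outermost layer that still holds a copy.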

CDN (Cloudflare)

A CDN intercepts requests before they even reach your infrastructure, which makes it your first line of defense. Its edge servers sit geographically closer to your users than your origin server does.

For optimal performance, static assets like images, CSS, and JavaScript files should be cached aggressively to minimize load times and offload traffic from your servers. For HTML pages and dynamic content, caching should be more conservative, with short TTLs (Time To Live) to ensure users see up-to-date content.

The Gateway (Nginx)

The goal of caching at this level is to protect your application server from repetitive load. If a CDN is unavailable or misses a request, Nginx can act as a protective layer to reduce server stress.

When your server is behind a CDN, static assets are already cached at the edge. However, caching dynamic GET requests for 1-10 seconds protects against traffic spikes, where thousands of users might request the same resource simultaneously. You may also want to protect against 404 floods, but take into account that caching 404s for 1 minute can mask newly created resources. An example of Nginx configuration for this scenario:

```nginx
proxy_cache_path /var/cache/nginx levels=1:2 keys_zone=my_cache:10m max_size=1g inactive=60m use_temp_path=off;

server {
    location / {
        proxy_cache my_cache;
        proxy_cache_valid 200 302 10s;  # Cache successful responses for 10 seconds
        proxy_cache_valid 404 1m;       # Protect against 404 floods
        proxy_cache_use_stale error timeout updating http_500;  # Serve stale content if the app crashes
        add_header X-Cache-Status $upstream_cache_status;       # Debugging aid
        proxy_pass http://127.0.0.1:3000;  # Assumed upstream address; replace with your application server
    }
}
```

If your application does not use a CDN, Nginx becomes your primary cache layer. In this case, static assets should be cached for a long duration to maximize performance, but HTML and other dynamic content should use shorter TTLs to ensure users receive up-to-date pages.

Application Data (Redis)

To avoid unnecessary database queries or repeated third-party API calls, cache the results of expensive operations. The application first checks Redis. If the data is present, it is returned immediately. If it isn't cached, the application fetches it from the database or external API, stores it in Redis with an appropriate TTL, and then returns the result. This pattern is commonly called cache-aside.
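The read path described above can be sketched as follows. This is a minimal illustration rather than a production implementation: the `KeyValueStore` interface, the `InMemoryStore` class, and the `getOrLoad` helper are hypothetical names, and the in-memory store is only a stand-in so the example runs without a Redis server. In a real application, a Redis client would sit behind the same interface.

```typescript
// Minimal key-value interface; a real Redis client would sit behind it.
interface KeyValueStore {
  get(key: string): Promise<string | null>;
  set(key: string, value: string, ttlSeconds: number): Promise<void>;
}

// In-memory stand-in for Redis so the sketch runs without a server.
class InMemoryStore implements KeyValueStore {
  private entries = new Map<string, { value: string; expiresAt: number }>();

  async get(key: string): Promise<string | null> {
    const entry = this.entries.get(key);
    if (!entry) return null;
    if (Date.now() >= entry.expiresAt) {
      this.entries.delete(key); // expired: treat as a miss
      return null;
    }
    return entry.value;
  }

  async set(key: string, value: string, ttlSeconds: number): Promise<void> {
    this.entries.set(key, { value, expiresAt: Date.now() + ttlSeconds * 1000 });
  }
}

// Cache-aside read: check the cache, fall back to the source, store with a TTL.
async function getOrLoad<T>(
  store: KeyValueStore,
  key: string,
  ttlSeconds: number,
  load: () => Promise<T>,
): Promise<T> {
  const cached = await store.get(key);
  if (cached !== null) return JSON.parse(cached) as T;

  const fresh = await load(); // the expensive query or API call
  await store.set(key, JSON.stringify(fresh), ttlSeconds);
  return fresh;
}
```

Note that this sketch does nothing to prevent several concurrent misses from each calling `load`. Under heavy traffic you would likely want some form of request coalescing or locking on top of it.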

Redis is well suited for storing user sessions, the results of complex SQL queries such as “Top 10 Best Sellers,” and responses from external APIs like exchange rate providers. This reduces latency, lowers database load, and improves performance.

The Client (Browser)

The Cache-Control header, sent in a server response, tells the browser how to cache content. The immutable directive tells the browser that a file will not change while it is fresh, so the browser can skip revalidation, which is ideal for hashed build assets. The stale-while-revalidate directive allows the browser to display the cached version instantly while fetching an updated copy in the background, keeping delivery fast without letting content drift far out of date.

Modern build tools and frameworks rely heavily on browser caching. Whether you use Next.js or a traditional Vite + React setup, the build output contains hashed static assets, which the browser can cache indefinitely with the immutable directive.
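As a rough sketch of how these directives might be assigned, the helper below picks a Cache-Control value from the request path. The function name `cacheControlFor` and the specific durations are assumptions made for illustration. The two prefixes reflect where Next.js (`/_next/static/`) and Vite's default configuration (`/assets/`) place hashed build output, so adjust them to match your own build.

```typescript
// Path prefixes assumed to contain only content-hashed build assets.
const IMMUTABLE_PREFIXES = ["/_next/static/", "/assets/"];

// Hypothetical helper: choose a Cache-Control header value for a request path.
function cacheControlFor(path: string): string {
  if (IMMUTABLE_PREFIXES.some((prefix) => path.startsWith(prefix))) {
    // Hashed file: a new build produces a new URL, so cache for a year.
    return "public, max-age=31536000, immutable";
  }
  // Everything else: fresh for 60 seconds, then may be served stale for up
  // to 5 minutes while a revalidation runs in the background.
  return "public, max-age=60, stale-while-revalidate=300";
}
```

Responses that contain user-specific data would need `private` or `no-store` instead of the `public` values used here.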

Next.js additionally uses a multi-layer caching strategy to optimize performance:

Cache Components (new in Next.js 16): The use cache directive caches the results of async functions and components, so you can serve fast, cached responses while still updating content when it actually changes.

Data Cache: A server-side cache that persists across user requests and deployments. Next.js extends the native fetch API to allow granular caching control per request. In Next.js 16, fetch responses are not cached by default; caching must be explicitly enabled using cache: 'force-cache'.

Full Route Cache: Next.js renders statically rendered routes at build time and caches the result, serving static HTML instead of re-rendering on the server for every request.

Router Cache: A client-side cache. When a user navigates between routes, Next.js caches the visited route segments and prefetches the routes the user is likely to navigate to. This results in instant back/forward navigation and no full-page reload between navigations.
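To make the per-request control of the Data Cache more concrete, the sketch below builds the options object that would be passed as the second argument to fetch in a Next.js server component. The `cache`, `next.revalidate`, and `next.tags` fields are the fetch extensions Next.js documents. The helper name `dataCacheOptions` and the local types are hypothetical, declared here only so the example type-checks outside a Next.js project.

```typescript
// Local stand-ins for the fetch options Next.js understands.
type NextCacheConfig = { revalidate?: number | false; tags?: string[] };
type NextFetchOptions = {
  cache?: "force-cache" | "no-store";
  next?: NextCacheConfig;
};

// Hypothetical helper: opt a request in to the Data Cache.
function dataCacheOptions(
  revalidateSeconds?: number,
  tags: string[] = [],
): NextFetchOptions {
  const next: NextCacheConfig = {};
  if (tags.length > 0) next.tags = tags;

  if (revalidateSeconds === undefined) {
    // No time limit: keep the response cached until it is explicitly revalidated.
    return { cache: "force-cache", next };
  }
  // Time-based revalidation: reuse the cached response for this many seconds.
  next.revalidate = revalidateSeconds;
  return { next };
}
```

Inside a Next.js project, a call such as `fetch(url, dataCacheOptions(3600, ["products"]))` would, under these assumptions, cache the response for an hour and label it with a tag that can be revalidated later.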

Common Pitfalls and Mistakes

- Misconfigured headers can serve stale or private data at scale.

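- Make sure cache keys for shared content don't include user-specific data like auth cookies; otherwise every user gets a separate cache entry and the hit rate collapses. Responses that really are per-user should bypass shared caches rather than be keyed by cookie.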

- Use purge/revalidation as part of your CI/CD to avoid stale content being served.
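One way to keep user-specific data out of shared cache keys is to derive the key from the method and a normalized URL only. The sketch below is an illustration under stated assumptions: the function names, the list of ignored tracking parameters, and the rule that any request carrying a cookie or Authorization header skips the shared cache are all hypothetical choices, not settings of a particular CDN or proxy.

```typescript
// Query parameters assumed, for this sketch, not to affect the response.
const IGNORED_PARAMS = new Set(["utm_source", "utm_medium", "utm_campaign", "fbclid", "gclid"]);

const compare = (x: string, y: string): number => (x < y ? -1 : x > y ? 1 : 0);

// Hypothetical helper: build a shared-cache key from method and URL only.
// Cookies and Authorization headers are deliberately not part of the key.
function sharedCacheKey(method: string, rawUrl: string): string {
  const url = new URL(rawUrl);
  const params: Array<[string, string]> = [];
  url.searchParams.forEach((value, name) => {
    if (!IGNORED_PARAMS.has(name)) params.push([name, value]);
  });
  // Sort so that ?a=1&b=2 and ?b=2&a=1 map to the same cache entry.
  params.sort((a, b) => (a[0] === b[0] ? compare(a[1], b[1]) : compare(a[0], b[0])));
  const query = params
    .map(([name, value]) => `${encodeURIComponent(name)}=${encodeURIComponent(value)}`)
    .join("&");
  return `${method.toUpperCase()} ${url.host}${url.pathname}${query ? "?" + query : ""}`;
}

// Hypothetical guard: requests carrying credentials skip the shared cache.
// Header names are assumed to be lowercased already.
function canUseSharedCache(headers: Record<string, string | undefined>): boolean {
  return !headers["authorization"] && !headers["cookie"];
}
```

Treating every cookie as a reason to bypass the cache is deliberately strict. A real setup would more likely check for a specific session cookie.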

Cache invalidation is a hard problem, but it is manageable with the right approach: always set sensible expirations (TTLs), purge caches when data changes, and tag related cache entries for bulk invalidation where supported.
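The combination of TTLs and tags can be illustrated with a small in-memory model. The `TaggedCache` class below is hypothetical and not the API of any particular cache. It only shows the bookkeeping involved: each entry carries an expiry time, and a reverse index from tag to keys makes bulk invalidation a single call.

```typescript
// Hypothetical in-memory cache with TTLs and tags, to illustrate bulk invalidation.
class TaggedCache<V> {
  private entries = new Map<string, { value: V; expiresAt: number }>();
  private keysByTag = new Map<string, Set<string>>();

  set(key: string, value: V, ttlSeconds: number, tags: string[] = []): void {
    this.entries.set(key, { value, expiresAt: Date.now() + ttlSeconds * 1000 });
    for (const tag of tags) {
      let keys = this.keysByTag.get(tag);
      if (!keys) {
        keys = new Set<string>();
        this.keysByTag.set(tag, keys);
      }
      keys.add(key);
    }
  }

  get(key: string): V | undefined {
    const entry = this.entries.get(key);
    if (!entry) return undefined;
    if (Date.now() >= entry.expiresAt) {
      this.entries.delete(key); // TTL elapsed: treat as a miss
      return undefined;
    }
    return entry.value;
  }

  // Purge every entry carrying the tag; returns how many entries were removed.
  invalidateTag(tag: string): number {
    const keys = this.keysByTag.get(tag);
    if (!keys) return 0;
    let removed = 0;
    keys.forEach((key) => {
      if (this.entries.delete(key)) removed++;
    });
    this.keysByTag.delete(tag);
    return removed;
  }
}
```

For example, every cached product page could be stored with a `products` tag, and a price update would then call `invalidateTag("products")` instead of tracking individual keys.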

Test headers and CDN rules. One misplaced header or an inappropriately included cookie can disable or corrupt caching behavior.

Summary

Effective caching requires understanding each layer's role. Start with aggressive edge caching, add short-duration server caching for spike protection, cache expensive operations in Redis, and leverage browser caching with proper headers.

The goal: minimize redundant work at every level.